Facial recognition software works by scanning your face and then searching online databases for photographs that produce a positive match. This includes searching public photos on social media, along with the rest of the internet, but how much public online data is searched comes down to what each individual company believes is appropriate when developing its software.
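
To make the idea of a "positive match" concrete, here is a minimal sketch of one common way matching is done. It assumes each face has already been converted into a short list of numbers (an "embedding") and that two faces count as a match when their cosine similarity passes a threshold. The vectors, the photo names, the `find_matches` helper and the 0.9 threshold are all made up for illustration; the article does not describe how any particular product, including Clearview's, actually works.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two vectors: 1.0 means same direction, 0.0 unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_matches(probe, database, threshold=0.9):
    """Return (name, score) pairs scoring at or above the threshold, best first."""
    scored = [(name, cosine_similarity(probe, vec)) for name, vec in database.items()]
    hits = [(name, score) for name, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


# Hypothetical embeddings standing in for photos collected from the web.
database = {
    "photo_a": [0.90, 0.10, 0.30],
    "photo_b": [0.10, 0.80, 0.50],
    "photo_c": [0.88, 0.15, 0.28],
}
probe = [0.90, 0.12, 0.30]  # hypothetical embedding of the scanned face

matches = find_matches(probe, database)
for name, score in matches:
    print(f"{name}: {score:.3f}")
```

The threshold is the design choice that matters: set it lower and more photos are flagged, including wrong ones; set it higher and genuine matches start to be missed.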

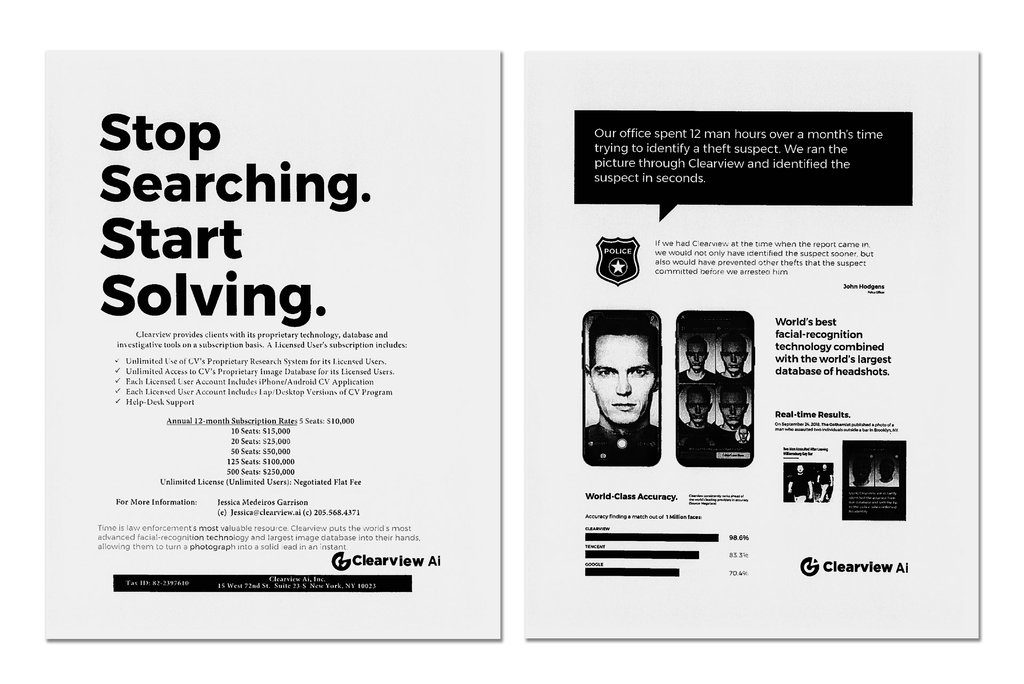

One particular company in America has now pushed these boundaries to their limits. A recent report from the New York Times revealed that federal and state law enforcement officers have been using a newly developed facial recognition app by a secretive start-up company called Clearview AI. Its software goes far beyond anything ever constructed by the United States government or Silicon Valley giants, scraping more than three billion images from Facebook, YouTube, Venmo and millions of other websites, which makes the technology incredibly accurate. The New York Times reporter found it could even identify them with more than half of their face covered.

Amr Alfiky for The New York Times


The company has provided the app to hundreds of law enforcement agencies to help them match photos of unknown people to their online images, in what some would argue is a big win for those trying to enforce the law. However, the ethical questions that such powerful technology poses are cause for concern.

Federal and state law enforcement officers said that although they had only limited knowledge of how Clearview works and who is behind it, they had still used the app to help solve shoplifting, identity theft, credit card fraud, murder and child sexual exploitation cases. Clearview has also licensed the app to at least a handful of companies for security purposes.

Most often, advocates of this technology point to its potentially game-changing usefulness for those working in law enforcement and security who need to identify criminals or trespassers. But this has always been highly dependent on how accurate and reliable the technology is at finding a correct positive match. Until recently, attempts to develop software that is accurate enough have had a very low success rate. South Wales Police trialled the technology in 2018, and an evaluation by Cardiff University found it to have a 90% false positive rate, meaning it reports a match when there isn’t one. Likewise, there is also the possibility of false negatives, where the technology does not pick up on a match even though there is one in the database.
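
The two kinds of error described above come down to simple arithmetic. The sketch below uses made-up counts, chosen only so that the share of wrong alerts comes out at 90%; they are not the figures from the South Wales trial, and `alert_error_rates` is a hypothetical helper rather than part of any real system.

```python
def alert_error_rates(true_matches, false_matches, missed_matches):
    """Return (share of alerts that were wrong, share of listed people missed)."""
    alerts = true_matches + false_matches    # every time the system said "match"
    present = true_matches + missed_matches  # every listed person who really appeared
    share_wrong = false_matches / alerts if alerts else 0.0
    share_missed = missed_matches / present if present else 0.0
    return share_wrong, share_missed


# Illustrative counts only: 100 alerts, of which 90 pointed at the wrong person,
# plus 5 people on the watch list who walked past without being flagged.
wrong, missed = alert_error_rates(true_matches=10, false_matches=90, missed_matches=5)
print(f"{wrong:.0%} of alerts were wrong; {missed:.0%} of listed people were missed")
```

A false positive puts an innocent person in front of the police, while a false negative lets a wanted person through, so a system is usually judged on both figures rather than one.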

In the past, the technology has in particular been found to be worse at telling apart black individuals and people from other ethnic minorities. Research published by the US National Institute of Standards and Technology in December 2019 examined facial recognition systems provided by 99 tech developers around the world. It found that people from ethnic minorities were up to 100 times more likely to be misidentified than white individuals. Such a high rate of incorrect results, particularly when concentrated among entire groups of people, is more than problematic. It clearly indicates how easily the technology could lead to people being wrongly harassed and could result in racial discrimination when attempting to identify a suspect.

Computer science Professor Dean Chadwick, from the University of Kent, says, “In the most extreme cases you could imagine that the police could say ‘you were at this crime scene, you did this crime, we have recognised you’ and it could be completely wrong and it wasn’t you.”

With the release of Clearview’s app, even if accuracy is no longer the issue, that only raises further concerns. The question is no longer whether the technology does in fact work, but whether and how it should be used.

Professor Chadwick explains, “what you need to understand is that no technology is perfect and face recognition is only as good as the matching algorithm.” He adds that all facial recognition uses a matching algorithm, and all of them can suffer from false positives and false negatives but, “now as systems get better and the algorithms get better, they’re clearly going to get better until they can navigate to a 99% correct rate, and then it becomes a real challenge to society because we haven’t given our consent and that’s a problem.”

“If you think about the traditional uses of fingerprints or DNA, the police only take those when they suspect you of a crime or at a crime scene, so the database is limited. But with face recognition you can collect pictures from all over the internet. We’re giving our pictures all the time, so if people are harvesting them they’ve got a massive database without us consenting to it.”

The capability for facial recognition has been around for a while now, but many tech companies have held back on developing or releasing it because of the ethical implications. In the wrong hands and with ill intent, the tool could identify activists at a protest or an attractive stranger on the subway, revealing not just their names but where they live, what they do and who they know. It is not only a huge intrusion on personal privacy but could seriously threaten people’s individual safety. Perhaps most concerning is that both police officers and Clearview’s investors predict that the app will eventually be available to the public.

Professor Chadwick agrees with this prediction, saying, “any company can buy the software and of course they will be selling it, like the American company that’s going to be wanting to make a profit out of it so it’s going to want lots of people to buy its software and to use its database and they’ll sell that at a profit.”

He gives the example of a company that went to a dating website and collected pictures of people who hadn’t given their real names on the site; the software identified them and revealed their real names. “People who are wanting to behave in a private manner were actually outed because of face recognition.”

If this type of power were freely accessible to the public, it would clearly endanger many individuals, putting them at risk from sexual harassment, stalkers and, in the most extreme but still possible cases, rapists and sex trafficking organisations.

Because of this, Professor Chadwick argues that there needs to be further public debate. “This is always the case, technology rushes ahead of the law, and so it would be good I think to do a pause just so the politicians and lawmakers can catch up with it and there can be a public debate where we can decide as a nation, even as a world, what we want.”

Only last September, politician David Davis called for a halt to facial recognition trials to give parliament time to debate the technology, saying, “the issue with all these technologies is they are used in a completely ungoverned space. There are ‘guidelines’ on their use. But nothing in the way of strict regulations with a statutory backing.”

He added, “the time to act on this is now while the technology is not as entrenched as other tools available to forces. If the government drags their feet on this, they will be left playing catch up.”

The European Commission is currently considering a five-year ban on the use of facial recognition in public areas to give regulators time to work out how to prevent the technology from being abused, or at least put safeguards in place. However, exceptions to the ban could be made for security projects as well as research and development.

Professor Chadwick adds, “there are always going to be states that are going to want to control their citizens but in the free west there should be a public debate so that we can decide what we want this technology to be used for in a legal manner.”
